Matrix Operations

Section 2.9: Dimension, Rank, and Coordinates

Dimension

Exercises 27 and 28 in section 2.9 of the text show the following important fact: every basis for a subspace H has the same number of vectors.

Definition. The dimension of a nonzero subspace H (written as "dim H") is the number of vectors in any basis for H. The dimension of the zero subspace is defined to be zero, and that subspace actually has no basis. (Why?)

The space $\mathbb{R}^n$ has dimension n. Every basis for $\mathbb{R}^n$ contains n vectors. A plane through the origin in $\mathbb{R}^3$ is two-dimensional, and a line through the origin is one-dimensional. (A plane which doesn't go through the origin is not a subspace, so it doesn't have a basis or a dimension.)

Definition. The rank of a matrix A (written as "rank A") is the dimension of the column space of A, i.e. dim Col A.

Notes. The pivot columns of A form a basis for Col A, so the rank of A is the number of pivot columns. Each column of A without a pivot represents a free variable in the equation $A\mathbf{x} = \mathbf{0}$, and will be associated with one vector in a basis for Nul A.

So dim Nul A is the number of columns without pivots.
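To make the pivot count concrete, here is a small Python sketch. The helper name `pivot_columns` and the example matrix are my own, chosen only for illustration. It row reduces with exact `fractions.Fraction` arithmetic and reads off rank A as the number of pivot columns and dim Nul A as the number of non-pivot columns.

```python
from fractions import Fraction


def pivot_columns(A):
    """Return the indices of the pivot columns of A (a list of rows).

    Row reduces a copy of A to echelon form using exact Fraction arithmetic,
    so no floating-point tolerance is needed.
    """
    R = [[Fraction(x) for x in row] for row in A]
    m, n = len(R), len(R[0])
    pivots, r = [], 0
    for c in range(n):
        if r == m:
            break  # every row already has a pivot
        p = next((i for i in range(r, m) if R[i][c] != 0), None)
        if p is None:
            continue  # no pivot in column c: it gives a free variable
        R[r], R[p] = R[p], R[r]
        for i in range(r + 1, m):  # clear the entries below the pivot
            f = R[i][c] / R[r][c]
            R[i] = [a - f * b for a, b in zip(R[i], R[r])]
        pivots.append(c)
        r += 1
    return pivots


# Illustrative matrix (not from the text): 3 rows, 4 columns.
A = [[1, 3, 2, 0],
     [2, 6, 5, 1],
     [1, 3, 3, 1]]
pivots = pivot_columns(A)
col_basis = [[row[c] for row in A] for c in pivots]  # pivot columns of A itself
rank = len(pivots)
dim_nul = len(A[0]) - rank  # one basis vector of Nul A per non-pivot column
print(pivots, rank, dim_nul)  # [0, 2] 2 2
```

Note that the basis for Col A uses the pivot columns of the original A, not of its echelon form.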

Theorem 14: (Rank Theorem). If a matrix A has n columns, then $\operatorname{rank} A + \dim \operatorname{Nul} A = n$.
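A sketch that checks the Rank Theorem on one illustrative matrix, assuming matrices are stored as Python lists of rows. The helpers `rref` and `null_space_basis` are hypothetical names of my own: the second builds one basis vector of Nul A per free variable, as described in the Notes, and the script confirms that these vectors solve $A\mathbf{x} = \mathbf{0}$ and that rank A + dim Nul A = n.

```python
from fractions import Fraction


def rref(A):
    """Reduced row echelon form of A (a list of rows), computed exactly.

    Returns (R, pivots) where pivots lists the pivot column indices.
    """
    R = [[Fraction(x) for x in row] for row in A]
    m, n = len(R), len(R[0])
    pivots, r = [], 0
    for c in range(n):
        if r == m:
            break
        p = next((i for i in range(r, m) if R[i][c] != 0), None)
        if p is None:
            continue  # column c has no pivot: its variable is free
        R[r], R[p] = R[p], R[r]
        piv = R[r][c]
        R[r] = [x / piv for x in R[r]]  # make the pivot equal to 1
        for i in range(m):  # clear column c above and below the pivot
            if i != r and R[i][c] != 0:
                f = R[i][c]
                R[i] = [a - f * b for a, b in zip(R[i], R[r])]
        pivots.append(c)
        r += 1
    return R, pivots


def null_space_basis(A):
    """One basis vector of Nul A per free variable of Ax = 0.

    Each vector sets its free variable to 1, the other free variables to 0,
    and solves for the basic (pivot) variables from the RREF.
    """
    R, pivots = rref(A)
    n = len(A[0])
    basis = []
    for f in (c for c in range(n) if c not in pivots):
        v = [Fraction(0)] * n
        v[f] = Fraction(1)
        for row, pc in enumerate(pivots):
            v[pc] = -R[row][f]
        basis.append(v)
    return basis, pivots


A = [[1, 2, 0, 3],
     [2, 4, 1, 7],
     [0, 0, 1, 1]]
basis, pivots = null_space_basis(A)
rank, nullity, n = len(pivots), len(basis), len(A[0])
print(rank, nullity, n)  # 2 2 4
assert all(sum(a * x for a, x in zip(row, v)) == 0 for v in basis for row in A)
assert rank + nullity == n  # the Rank Theorem for this A
```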

Theorem 15: (Basis Theorem). Let H be a p-dimensional subspace of $\mathbb{R}^n$. Then any linearly independent set of exactly p vectors in H spans H (and is therefore a basis for H). Also, any set of p vectors in H which spans H is linearly independent (and is therefore a basis for H). (Proved in section 4.5 of the text.)

Also, look over the continued pieces of the Invertible Matrix Theorem (on page 179), and ask about any which are not clear. This theorem, which began in section 2.3, now has 18 statements about invertible matrices, which are all equivalent! It is very important to note that these 18 statements are only equivalent for square matrices. If A is not square, then e.g. parts (a) and (j) are not equivalent (and many other parts break as well).

Coordinates

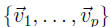

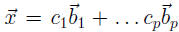

Reminder. Given a set of vectors $\{\mathbf{a}_1, \dots, \mathbf{a}_p\}$ in $\mathbb{R}^n$, can we write another vector $\mathbf{b}$ as a linear combination of the given set?

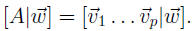

To answer this question, row reduce the augmented matrix $[\mathbf{a}_1 \; \cdots \; \mathbf{a}_p \; \mathbf{b}]$.

If the system is consistent , the answer is "yes," otherwise "no." A

solution

to

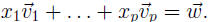

gives coefficients

x 1, . . . , xp satisfying

gives coefficients

x 1, . . . , xp satisfying
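The consistency test can be sketched in Python as follows. `in_span` is a hypothetical helper, vectors are plain lists, and exact `Fraction` arithmetic avoids rounding issues. It forward-reduces the augmented matrix and answers "no" as soon as a pivot lands in the augmented column.

```python
from fractions import Fraction


def in_span(vectors, b):
    """Return True if b is a linear combination of `vectors`.

    Forward-reduces the augmented matrix [a1 ... ap | b]; the system is
    inconsistent exactly when a pivot appears in the last column, i.e. some
    row becomes [0 ... 0 | nonzero].
    """
    n, p = len(b), len(vectors)
    M = [[Fraction(v[i]) for v in vectors] + [Fraction(b[i])] for i in range(n)]
    r = 0
    for c in range(p + 1):
        if r == n:
            break  # every row has a pivot among the coefficient columns
        piv = next((i for i in range(r, n) if M[i][c] != 0), None)
        if piv is None:
            continue
        if c == p:
            return False  # pivot in the augmented column: no solution
        M[r], M[piv] = M[piv], M[r]
        for i in range(r + 1, n):
            f = M[i][c] / M[r][c]
            M[i] = [s - f * t for s, t in zip(M[i], M[r])]
        r += 1
    return True


a1, a2 = [1, 0, 1], [0, 1, 1]        # illustrative vectors in R^3
print(in_span([a1, a2], [2, 3, 5]))  # True  (2*a1 + 3*a2)
print(in_span([a1, a2], [1, 1, 0]))  # False
```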

If the matrix A is invertible (which requires it to be square, so p = n), there will be a unique way to write $\mathbf{b}$ as a linear combination of $\mathbf{a}_1, \dots, \mathbf{a}_p$. In fact, the coefficients will be $\mathbf{x} = A^{-1}\mathbf{b}$.
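A minimal sketch of the invertible case, assuming a square A given as a list of rows. `inverse` (a hypothetical helper) row reduces $[A \mid I]$ to $[I \mid A^{-1}]$, and `coefficients` multiplies $A^{-1}$ by $\mathbf{b}$. The 2 × 2 matrix is only an illustration.

```python
from fractions import Fraction


def inverse(A):
    """Invert a square matrix by row reducing [A | I] to [I | A^-1].

    Raises ValueError if some column has no pivot (A is not invertible).
    """
    n = len(A)
    M = [[Fraction(x) for x in row] + [Fraction(1 if i == j else 0) for j in range(n)]
         for i, row in enumerate(A)]
    for c in range(n):
        p = next((i for i in range(c, n) if M[i][c] != 0), None)
        if p is None:
            raise ValueError("matrix is not invertible")
        M[c], M[p] = M[p], M[c]
        piv = M[c][c]
        M[c] = [x / piv for x in M[c]]  # scale so the pivot is 1
        for i in range(n):  # clear the rest of column c
            if i != c and M[i][c] != 0:
                f = M[i][c]
                M[i] = [x - f * y for x, y in zip(M[i], M[c])]
    return [row[n:] for row in M]


def coefficients(A, b):
    """Return the unique x with Ax = b, computed as x = A^-1 b."""
    return [sum(a * bi for a, bi in zip(row, b)) for row in inverse(A)]


# Illustrative example: columns a1 = (2, 5) and a2 = (1, 3).
A = [[2, 1],
     [5, 3]]
b = [1, 2]
x = coefficients(A, b)
print([str(t) for t in x])  # ['1', '-1'], i.e. b = 1*a1 - 1*a2
```

For actual computation it is usually cheaper to row reduce $[A \; \mathbf{b}]$ directly than to form $A^{-1}$; the inverse is used here only to mirror the formula $\mathbf{x} = A^{-1}\mathbf{b}$.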

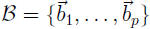

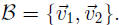

Say we have a set $\mathcal{B} = \{\mathbf{b}_1, \dots, \mathbf{b}_p\}$ which is a basis for some subspace H. Given any vector $\mathbf{x}$ in H, we can write $\mathbf{x}$ as a unique linear combination of the vectors in $\mathcal{B}$, let's say $\mathbf{x} = c_1\mathbf{b}_1 + \cdots + c_p\mathbf{b}_p$. This vector of coefficients,

$$\begin{bmatrix} c_1 \\ \vdots \\ c_p \end{bmatrix},$$

is called the coordinate vector of $\mathbf{x}$ relative to $\mathcal{B}$, or the $\mathcal{B}$-coordinate vector of $\mathbf{x}$, and is written as $[\mathbf{x}]_{\mathcal{B}}$.

Note that the order of the vectors in $\mathcal{B}$ matters: changing their order would change $[\mathbf{x}]_{\mathcal{B}}$.

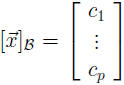

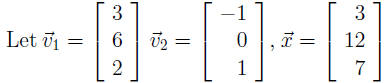

Example 1 (from textbook)

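Let $\mathcal{B} = \{\mathbf{v}_1, \mathbf{v}_2\}$ and $H = \operatorname{Span}\{\mathbf{v}_1, \mathbf{v}_2\}$, where $\mathbf{v}_1$ and $\mathbf{v}_2$ (and the vector $\mathbf{x}$ used below) are the vectors given in the textbook's example.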

Here, $\mathcal{B}$ is actually a basis for H because the vectors in $\mathcal{B}$ are linearly independent, and by definition they span H.

Determine whether $\mathbf{x}$ is in H, and find its $\mathcal{B}$-coordinates if it is.

If $\mathbf{x}$ is in H, then the following vector equation is consistent:

$$c_1\mathbf{v}_1 + c_2\mathbf{v}_2 = \mathbf{x}.$$

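The scalars $c_1$ and $c_2$, if they exist, are the $\mathcal{B}$-coordinates of $\mathbf{x}$. Row reduction of the augmented matrix $[\mathbf{v}_1 \; \mathbf{v}_2 \; \mathbf{x}]$ gives their values, and so $[\mathbf{x}]_{\mathcal{B}} = \begin{bmatrix} c_1 \\ c_2 \end{bmatrix}$.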
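The row reduction in Example 1 can be sketched in Python. Since the example's actual vectors are not reproduced in this text, the entries of `v1`, `v2`, and `x` below are values chosen for illustration, and `b_coordinates` is a hypothetical helper name. It reduces $[\mathbf{v}_1 \; \mathbf{v}_2 \; \mathbf{x}]$ to reduced echelon form and returns `None` when the system is inconsistent (so $\mathbf{x}$ is not in H).

```python
from fractions import Fraction


def b_coordinates(basis, x):
    """Return [x]_B as a list of Fractions, or None if x is not in Span(B).

    Row reduces the augmented matrix [b1 ... bp | x] to reduced echelon form.
    Assumes the vectors in `basis` are linearly independent (so every
    coefficient column gets a pivot); raises ValueError otherwise.
    """
    n, p = len(x), len(basis)
    M = [[Fraction(v[i]) for v in basis] + [Fraction(x[i])] for i in range(n)]
    for c in range(p):
        piv = next((i for i in range(c, n) if M[i][c] != 0), None)
        if piv is None:
            raise ValueError("basis vectors are linearly dependent")
        M[c], M[piv] = M[piv], M[c]
        pv = M[c][c]
        M[c] = [t / pv for t in M[c]]
        for i in range(n):
            if i != c and M[i][c] != 0:
                f = M[i][c]
                M[i] = [s - f * t for s, t in zip(M[i], M[c])]
    # Rows p..n-1 now read [0 ... 0 | d]; any d != 0 means no solution.
    if any(M[i][p] != 0 for i in range(p, n)):
        return None
    return [M[i][p] for i in range(p)]


# Illustrative vectors in R^3 (chosen for this sketch).
v1 = [3, 6, 2]
v2 = [-1, 0, 1]
x = [3, 12, 7]
print([str(c) for c in b_coordinates([v1, v2], x)])  # ['2', '3']
print(b_coordinates([v1, v2], [0, 0, 1]))  # None: not in H
```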

A More General Example

Consider the "vector space" V of all 3 × 3 matrices. See section 4.1 for details about vector spaces in general; we won't worry about all of the details for now. But basically, a vector space is a collection of "objects" satisfying some basic properties. We call these objects "vectors" even though they may not be vectors in $\mathbb{R}^n$ like the ones we are accustomed to. They may be matrices, polynomials, some other types of functions, etc.

Subspace

Now think about H, the subset of V consisting of all symmetric matrices, i.e. matrices satisfying $A^T = A$.

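Note that in a symmetric matrix, $a_{ij} = a_{ji}$ for every $i$ and $j$; that is, the entries are mirrored across the main diagonal.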

In fact, H is a subspace of V: the three properties of a subspace hold.

(i) The zero "vector" from V is in H. But now we are thinking about the vector space V, so the zero element in V is really the 3 × 3 matrix containing all zeros, which is certainly symmetric.

(ii) The sum of two symmetric matrices is symmetric, since $(A + B)^T = A^T + B^T = A + B$. So if we have two "vectors" in H, their sum is in H.

(iii) A symmetric matrix multiplied by a constant is still symmetric, since $(cA)^T = cA^T = cA$. So any constant times a "vector" in H is still in H.

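As a numerical spot-check of properties (i) to (iii) (not a proof, just a check on particular matrices), the sketch below tests a few illustrative 3 × 3 matrices stored as Python lists of rows. The helper names `transpose`, `is_symmetric`, `add`, and `scale` are my own.

```python
def transpose(M):
    """Swap rows and columns of a matrix stored as a list of rows."""
    return [list(col) for col in zip(*M)]


def is_symmetric(M):
    """A matrix is symmetric when it equals its transpose."""
    return M == transpose(M)


def add(M, N):
    """Entrywise sum of two matrices of the same shape."""
    return [[a + b for a, b in zip(rm, rn)] for rm, rn in zip(M, N)]


def scale(k, M):
    """Multiply every entry of M by the constant k."""
    return [[k * a for a in row] for row in M]


Z = [[0, 0, 0], [0, 0, 0], [0, 0, 0]]  # the zero "vector" of V
S = [[1, 2, 3], [2, 4, 5], [3, 5, 6]]
T = [[0, -1, 7], [-1, 2, 0], [7, 0, 9]]
print(is_symmetric(Z), is_symmetric(add(S, T)), is_symmetric(scale(-4, S)))
# True True True
print(is_symmetric(add(S, [[0, 1, 0], [0, 0, 0], [0, 0, 0]])))  # False
```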
Basis

A basis for H would be a set of linearly independent "vectors" from H which span

H. A

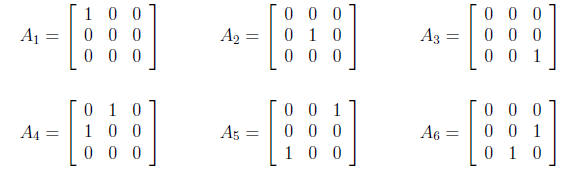

"vector" in H is a symmetric 3 × 3 matrix. Consider the following six

"vectors" from H.

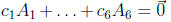

First, I'll verify that these six matrices are linearly independent by thinking about the equation

$$c_1A_1 + c_2A_2 + \cdots + c_6A_6 = \mathbf{0}$$

(where, remember, this vector $\mathbf{0}$ is really the zero vector in V, i.e. the 3 × 3 matrix of zeros).

The only way the combination on the left will be the zero matrix is if $c_1, \dots, c_6$ are all zero. So the only linear combination of $\{A_1, \dots, A_6\}$ which gives zero is the trivial linear combination, and therefore this set of "vectors" $\{A_1, \dots, A_6\}$ is linearly independent.

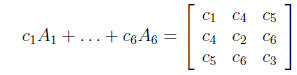

Next, any symmetric 3 × 3 matrix (i.e. a matrix satisfying $A^T = A$) is of the form given below. (First I write a completely general 3 × 3 matrix, and then rewrite it imposing the symmetry constraint.)

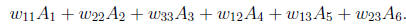

The symmetric matrix above can be written as a linear combination of $A_1, \dots, A_6$.

So in fact the set $\{A_1, \dots, A_6\}$ spans H. Since the set is also linearly independent, it is a basis for H, and so $\dim H = 6$.
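The independence and spanning arguments can be mirrored in Python under one loudly flagged assumption: the six matrices $A_1, \dots, A_6$ appear only in the figure, so this sketch assumes they are $E_{11}$, $E_{12} + E_{21}$, $E_{13} + E_{31}$, $E_{22}$, $E_{23} + E_{32}$, $E_{33}$ in that order (where $E_{ij}$ has a 1 in entry $(i, j)$; the code uses 0-based positions), which may differ from the figure. It flattens each matrix into a vector in $\mathbb{R}^9$ and checks for six pivots, then rebuilds an illustrative symmetric W from the six basis matrices. All helper names are my own.

```python
from fractions import Fraction


def E(i, j):
    """3x3 matrix with a 1 in entry (i, j) and zeros elsewhere (0-based)."""
    return [[1 if (r, c) == (i, j) else 0 for c in range(3)] for r in range(3)]


def add(M, N):
    """Entrywise sum of two matrices of the same shape."""
    return [[a + b for a, b in zip(rm, rn)] for rm, rn in zip(M, N)]


def rank(rows):
    """Number of pivots after forward elimination (exact Fraction arithmetic)."""
    R = [[Fraction(x) for x in row] for row in rows]
    m, n = len(R), len(R[0])
    r = 0
    for c in range(n):
        if r == m:
            break
        p = next((i for i in range(r, m) if R[i][c] != 0), None)
        if p is None:
            continue
        R[r], R[p] = R[p], R[r]
        for i in range(r + 1, m):
            f = R[i][c] / R[r][c]
            R[i] = [a - f * b for a, b in zip(R[i], R[r])]
        r += 1
    return r


# Assumed ordering of A1..A6: the upper-triangular positions, row by row.
POSITIONS = [(0, 0), (0, 1), (0, 2), (1, 1), (1, 2), (2, 2)]
basis = [E(i, j) if i == j else add(E(i, j), E(j, i)) for i, j in POSITIONS]

# Linear independence: flatten each A_k to a vector in R^9, use these as the
# columns of a 9 x 6 matrix, and check that all 6 columns have pivots.
flat = [[x for row in A for x in row] for A in basis]
M = [[v[k] for v in flat] for k in range(9)]
print(rank(M))  # 6

# Spanning: an illustrative symmetric W is the combination whose weights are
# its upper-triangular entries (under the assumed ordering).
W = [[1, 2, 3],
     [2, 4, 5],
     [3, 5, 6]]
combo = [[0, 0, 0] for _ in range(3)]
for (i, j), A in zip(POSITIONS, basis):
    combo = add(combo, [[W[i][j] * x for x in row] for row in A])
print(combo == W)  # True
```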

Coordinates

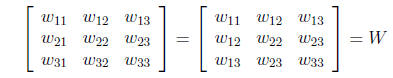

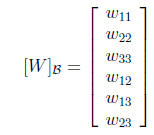

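Given the basis $\mathcal{B} = \{A_1, A_2, A_3, A_4, A_5, A_6\}$, the coordinates of the general symmetric matrix W given above are the six weights in that linear combination, collected into the vector $[W]_{\mathcal{B}}$ in $\mathbb{R}^6$.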
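Assuming $A_1, \dots, A_6$ are the symmetric "unit" matrices for the upper-triangular positions (1,1), (1,2), (1,3), (2,2), (2,3), (3,3), in that order (an assumption; the figure's ordering may differ), taking $\mathcal{B}$-coordinates amounts to reading off six entries. The helpers `coords` and `from_coords` are hypothetical names; the round trip illustrates that the coordinate map is one-to-one on H.

```python
# Assumed ordering of A1..A6: upper-triangular positions, row by row (0-based).
POSITIONS = [(0, 0), (0, 1), (0, 2), (1, 1), (1, 2), (2, 2)]


def coords(W):
    """[W]_B: read off the six independent entries of a symmetric 3x3 W."""
    if any(W[i][j] != W[j][i] for i in range(3) for j in range(3)):
        raise ValueError("W is not symmetric, so it is not in H")
    return [W[i][j] for i, j in POSITIONS]


def from_coords(x):
    """Inverse map: rebuild the symmetric matrix whose B-coordinates are x."""
    W = [[0] * 3 for _ in range(3)]
    for (i, j), value in zip(POSITIONS, x):
        W[i][j] = W[j][i] = value
    return W


W = [[1, 2, 3],
     [2, 4, 5],
     [3, 5, 6]]
print(coords(W))  # [1, 2, 3, 4, 5, 6]
assert from_coords(coords(W)) == W
```

Because this map is linear, $[W + V]_{\mathcal{B}} = [W]_{\mathcal{B}} + [V]_{\mathcal{B}}$ and $[cW]_{\mathcal{B}} = c[W]_{\mathcal{B}}$, which is what lets questions about H be answered in $\mathbb{R}^6$.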

Part of the real power of coordinates is that they let us take problems in more abstract spaces and turn them back into problems using "regular" vectors in $\mathbb{R}^n$ and the matrices we've been studying.

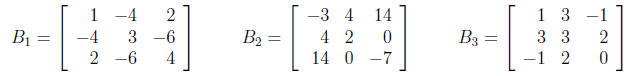

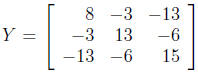

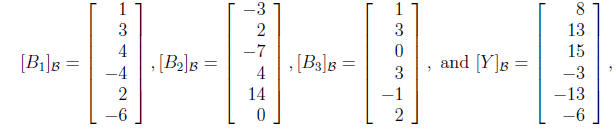

For example, given the three symmetric matrices $B_1$, $B_2$, and $B_3$ in H, can we write the matrix Y as a linear combination of $B_1$, $B_2$, and $B_3$? If so, how?

Looking back at how we wrote the $\mathcal{B}$-coordinates of a general symmetric matrix W, we can see that

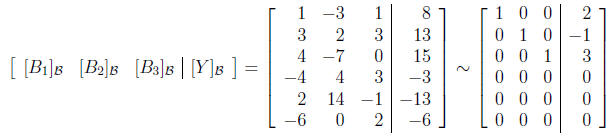

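Then, the question "can we write Y as a linear combination of $B_1$, $B_2$, and $B_3$?" is the same as asking "can we write $[Y]_{\mathcal{B}}$ as a linear combination of $[B_1]_{\mathcal{B}}$, $[B_2]_{\mathcal{B}}$, and $[B_3]_{\mathcal{B}}$?" We would answer that question by row reducing the augmented matrix $\big[\, [B_1]_{\mathcal{B}} \;\; [B_2]_{\mathcal{B}} \;\; [B_3]_{\mathcal{B}} \;\; [Y]_{\mathcal{B}} \,\big]$.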

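This system is consistent and has the unique solution $x_1 = 2$, $x_2 = -1$, and $x_3 = 3$. So yes, $[Y]_{\mathcal{B}}$ can be written as the linear combination $[Y]_{\mathcal{B}} = 2[B_1]_{\mathcal{B}} - [B_2]_{\mathcal{B}} + 3[B_3]_{\mathcal{B}}$. Since taking $\mathcal{B}$-coordinates respects linear combinations (and two matrices in H with the same coordinates are equal), Y can be written as the linear combination

$$Y = 2B_1 - B_2 + 3B_3.$$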
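A sketch of this coordinate computation in Python. The matrices $B_1$, $B_2$, $B_3$ in the notes appear only in the figures, so the ones below are hypothetical, and Y is deliberately built as $2B_1 - B_2 + 3B_3$ so the recovered weights can be compared with the answer above. The ordering of $A_1, \dots, A_6$ is assumed to follow the upper-triangular entries row by row; `coords`, `lin_comb`, and `solve_weights` are my own helper names.

```python
from fractions import Fraction

# Assumed ordering of A1..A6: upper-triangular positions, row by row (0-based).
POSITIONS = [(0, 0), (0, 1), (0, 2), (1, 1), (1, 2), (2, 2)]


def coords(W):
    """[W]_B for a symmetric 3x3 matrix W under the assumed ordering."""
    return [W[i][j] for i, j in POSITIONS]


def lin_comb(weights, mats):
    """Return the sum of w * M over matching weights and 3x3 matrices."""
    out = [[0, 0, 0] for _ in range(3)]
    for w, M in zip(weights, mats):
        out = [[o + w * m for o, m in zip(ro, rm)] for ro, rm in zip(out, M)]
    return out


def solve_weights(columns, target):
    """Row reduce [columns | target] to RREF.

    Returns the unique weights, or None if the system is inconsistent.
    Assumes the columns are linearly independent (raises ValueError if not).
    """
    n, p = len(target), len(columns)
    M = [[Fraction(col[i]) for col in columns] + [Fraction(target[i])]
         for i in range(n)]
    for c in range(p):
        piv = next((i for i in range(c, n) if M[i][c] != 0), None)
        if piv is None:
            raise ValueError("columns are linearly dependent")
        M[c], M[piv] = M[piv], M[c]
        pv = M[c][c]
        M[c] = [t / pv for t in M[c]]
        for i in range(n):
            if i != c and M[i][c] != 0:
                f = M[i][c]
                M[i] = [s - f * t for s, t in zip(M[i], M[c])]
    if any(M[i][p] != 0 for i in range(p, n)):
        return None  # a row [0 ... 0 | nonzero]: no solution
    return [M[i][p] for i in range(p)]


# Hypothetical B1, B2, B3 (the notes' actual matrices are in the figures).
B1 = [[1, 0, 0], [0, 1, 0], [0, 0, 0]]
B2 = [[0, 1, 0], [1, 0, 0], [0, 0, 1]]
B3 = [[1, 0, 1], [0, 0, 0], [1, 0, 0]]
Y = lin_comb([2, -1, 3], [B1, B2, B3])  # built to match the weights in the text
x = solve_weights([coords(B1), coords(B2), coords(B3)], coords(Y))
print([str(t) for t in x])  # ['2', '-1', '3']
```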

Similarly, anything we've done so far with vectors can be done within this space of 3 × 3 matrices.

E.g. asking whether $B_1$, $B_2$, and $B_3$ are linearly independent can be done by checking whether the three vectors $[B_1]_{\mathcal{B}}$, $[B_2]_{\mathcal{B}}$, and $[B_3]_{\mathcal{B}}$ are linearly independent. That is, put those vectors into the columns of a matrix, row reduce, and check whether every column has a pivot. If so, then $B_1$, $B_2$, and $B_3$ are linearly independent. If any column has no pivot, they are linearly dependent.
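The pivot check just described might look like this in Python, using hypothetical matrices $B_1$, $B_2$, $B_3$ (not the notes' matrices, which are in the figures) and an assumed ordering of $A_1, \dots, A_6$ along the upper-triangular entries. The helper `columns_independent` is my own name; the second call uses $B_1 + B_2$ as the third matrix to show a dependent case.

```python
from fractions import Fraction

# Assumed ordering of A1..A6: upper-triangular positions, row by row (0-based).
POSITIONS = [(0, 0), (0, 1), (0, 2), (1, 1), (1, 2), (2, 2)]


def coords(W):
    """[W]_B for a symmetric 3x3 matrix W under the assumed ordering."""
    return [W[i][j] for i, j in POSITIONS]


def columns_independent(columns):
    """True if every column of the matrix [c1 ... ck] gets a pivot."""
    n = len(columns[0])
    R = [[Fraction(col[i]) for col in columns] for i in range(n)]
    r = 0
    for c in range(len(columns)):
        p = next((i for i in range(r, n) if R[i][c] != 0), None)
        if p is None:
            return False  # column c has no pivot: the vectors are dependent
        R[r], R[p] = R[p], R[r]
        for i in range(r + 1, n):  # clear the entries below the pivot
            f = R[i][c] / R[r][c]
            R[i] = [a - f * b for a, b in zip(R[i], R[r])]
        r += 1
    return True


# Hypothetical symmetric matrices in H.
B1 = [[1, 0, 0], [0, 1, 0], [0, 0, 0]]
B2 = [[0, 1, 0], [1, 0, 0], [0, 0, 1]]
B3 = [[1, 0, 1], [0, 0, 0], [1, 0, 0]]
B_dep = [[1, 1, 0], [1, 1, 0], [0, 0, 1]]  # equals B1 + B2

print(columns_independent([coords(B1), coords(B2), coords(B3)]))     # True
print(columns_independent([coords(B1), coords(B2), coords(B_dep)]))  # False
```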
